📋 The Outline For This Week

Why your firm doesn't need AI experts (it needs AI directors)

Two battle-tested workflows that save 10+ hours per week

The prompting techniques actually working in legal practice

PLUS 18+ FREE copy-paste-ready AI prompts for common legal workflows

Your Lawyers Don't Need to Code.

They Need to Direct AI.

The more I watch AI capabilities advance, the more confident I am that the real leverage isn't in knowing how to connect tools or staying on top of every AI release.

It's in understanding what AI can execute for you, knowing which questions need answering, and having enough knowledge of the legal AI ecosystem to orchestrate the tools toward the outcomes you need—without needing to understand how those tools work under the hood.

Recently, a 50-attorney firm told me they didn't think they could implement AI strategically because "none of our lawyers are technical."

I asked them a simple question: Do your associates write legal briefs, or do they write software that writes legal briefs?

The answer revealed the biggest misconception blocking law firm AI implementation today.

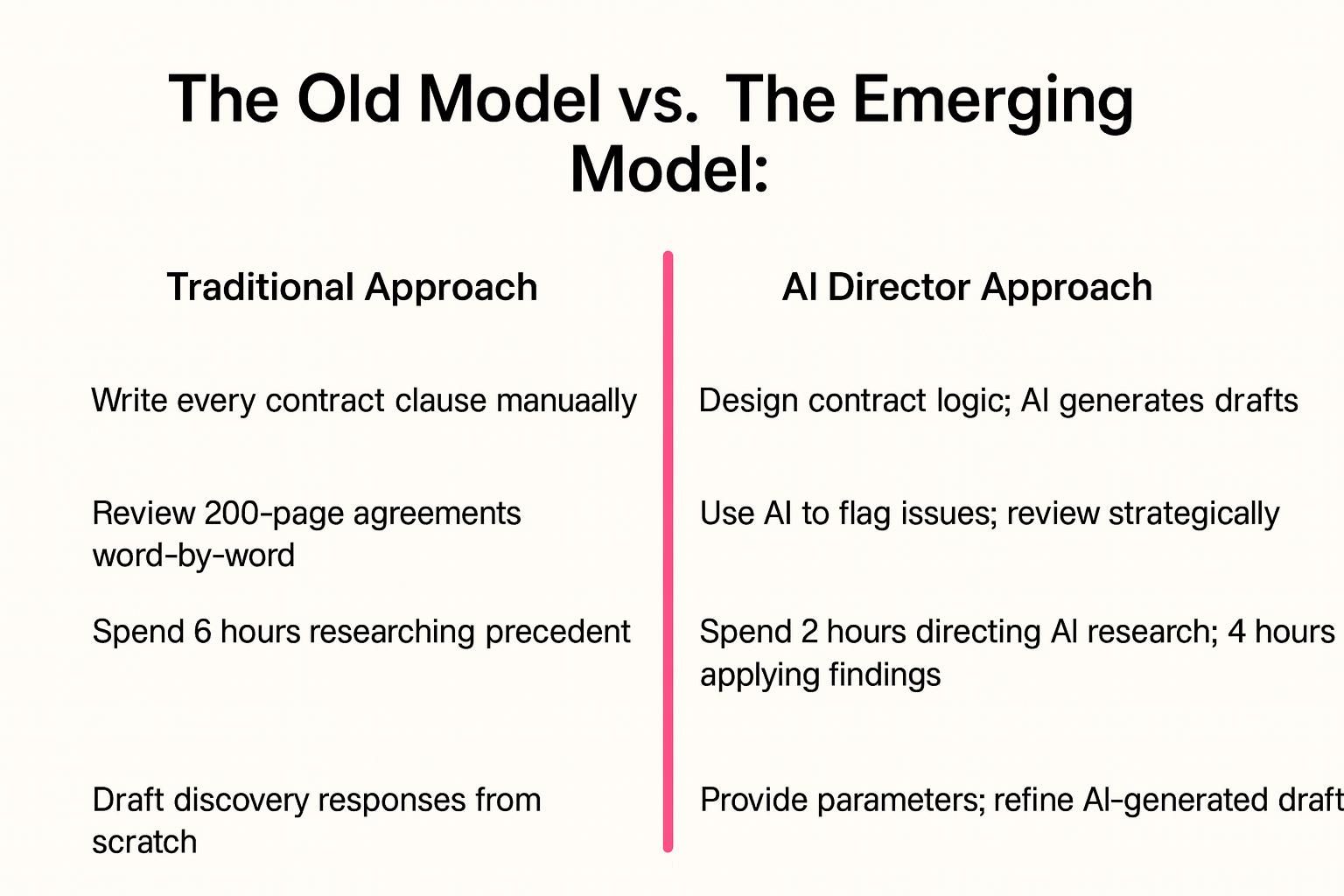

Here's what that misconception looks like in practice:

Most lawyers approach contract review by manually reading every clause, maybe asking ChatGPT to "summarize this section," then compiling findings themselves. They're using AI as a doer—giving it one small task at a time.

AI directors work differently. They provide a structured analytical framework upfront—what risks to flag, which provisions to compare, what protections to check—that guides AI through the full analysis. Then they spend their time verifying outputs and applying legal judgment, not doing the reading.

Same tool. Completely different approach.

You don't need programmers. You need professionals who can think strategically about what they want AI to execute, understand enough about available AI tools to choose the right one, and then craft instructions that get quality results.

That skill is closer to legal analysis than it is to AI 'vibe coding.'

I see law firms and in-house counsel alike who are stuck because they are seeking the wrong capabilities. They want people who understand APIs, workflow tools or how to fine-tune language models.

What they actually need are directors who can:

Identify which tasks AI should execute (contract clause drafting, risk flagging, document summarization)

Break down the desired outcome into clear instructions

Verify and refine the AI's execution with legal judgment

Lawyers already do this when they delegate to junior associates: define the task, specify what quality looks like, review the work, apply expertise to refine it.

AI direction is the same skill. Different syntax.

In this edition I want to share some practical ways ALL legal teams can leverage AI to get more done, without any fancy tools, development work, or technical know-how.

The Shift From Doer to Director

Here's what's changing in legal practice right now. For decades, junior lawyers spent years learning to "do" legal work: draft contracts, review documents, research case law. That work built judgment and expertise. It still matters.

But AI workflow automation in legal departments is creating a new layer. The most valuable skill in many contexts is becoming the ability to orchestrate AI to handle execution tasks—drafting clauses, flagging risks, summarizing documents—rather than executing every task yourself.

Think of it like a film director versus a camera operator. The director doesn't need to know how to operate every camera, adjust every lens setting, or understand the technical specs of equipment. They need to know what shot they want, which tools can capture it, and how to direct the crew to execute their vision.

Both roles require expertise, but they deploy it differently.

This doesn't mean lawyers become passive. It means they shift cognitive energy from executing tasks (typing every clause, reading every page word-for-word) to directing outcomes (designing the analytical framework, instructing AI on what to produce, verifying outputs, applying legal judgment to refine results).

In my experience, adoption tends to work best when you meet this reality head-on rather than pretending AI is just a research assistant.

The opportunity here is not about replacing lawyers. It's about freeing them to do more high-value work per week than was previously possible.

When a senior associate can produce quality output in 3 hours instead of 8, your firm doesn't cut that associate. You take on more clients or pursue more complex matters.

Workflow 1: AI Contract Review for Accuracy and Speed

Let me show you a specific workflow that demonstrates prompt engineering for legal professionals in action. This is for contract review in business law contexts, though you can adapt the framework to other practice areas.

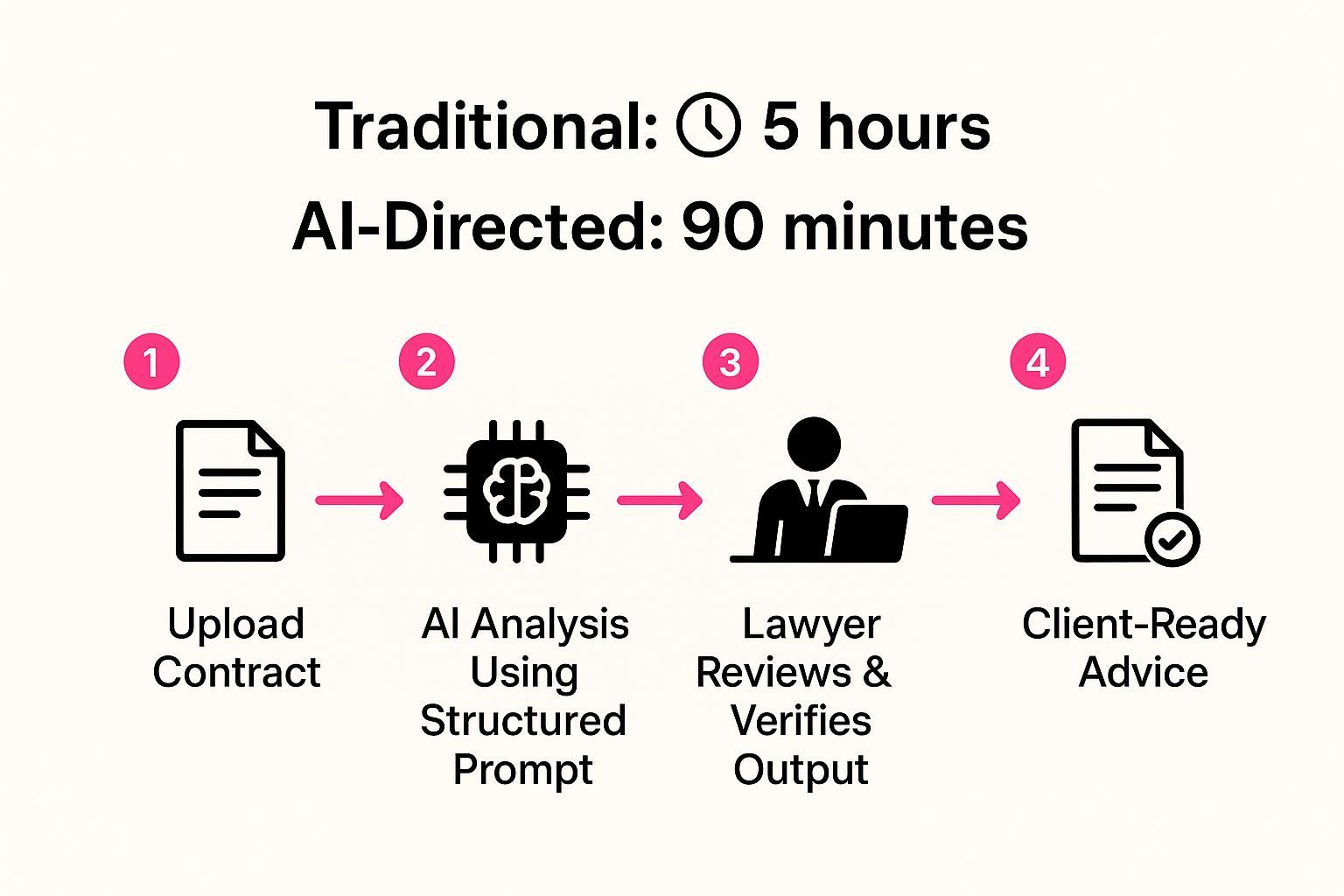

The Traditional Process:

Your lawyer receives a 40-page vendor agreement. They spend 4–5 hours reading every section, manually comparing terms to your firm's standard positions, highlighting issues in Track Changes, and drafting a memo. This happens 2–3 times per week. That's 8–15 hours weekly on contract review alone.

The AI-Directed Process:

The associate uploads the contract to Claude or ChatGPT with a carefully structured prompt. Here's the template many firms are finding effective:

"You are a business law attorney reviewing a vendor services agreement. Analyze this contract and provide:

Risk Assessment: Identify any terms that deviate from standard market practice in <jurisdiction> for vendor agreements, particularly around indemnification, limitation of liability, and termination rights.

Client Protection Analysis: Flag any provisions that could disadvantage the client, especially regarding data ownership, confidentiality, and dispute resolution.

Missing Protections: Note any standard protections typically found in vendor agreements that are absent from this draft.

Comparison to Benchmark: Compare key commercial terms (pricing structure, payment terms, renewal provisions) to typical terms in similar agreements.

Organize your response in a numbered list with specific section references."

This borrows from what's often called chain-of-thought prompting, applied to a legal context. You're not asking AI to "review this contract." You're giving it a structured analytical framework, the same way you would brief a junior associate. The AI follows that reasoning path.
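If someone on your team is comfortable with a little scripting, the template above can be kept as a reusable snippet so the only thing that changes per matter is the jurisdiction. This is a minimal sketch, not a required step: the function name `build_contract_review_prompt` and the example value "New York" are hypothetical, and the numbering of the four sections is my own addition for readability.

```python
from string import Template

# Hypothetical template based on the prompt above. $jurisdiction is the
# only placeholder; everything else is fixed text.
CONTRACT_REVIEW_TEMPLATE = Template(
    "You are a business law attorney reviewing a vendor services agreement. "
    "Analyze this contract and provide:\n\n"
    "1. Risk Assessment: Identify any terms that deviate from standard market "
    "practice in $jurisdiction for vendor agreements, particularly around "
    "indemnification, limitation of liability, and termination rights.\n"
    "2. Client Protection Analysis: Flag any provisions that could disadvantage "
    "the client, especially regarding data ownership, confidentiality, and "
    "dispute resolution.\n"
    "3. Missing Protections: Note any standard protections typically found in "
    "vendor agreements that are absent from this draft.\n"
    "4. Comparison to Benchmark: Compare key commercial terms (pricing "
    "structure, payment terms, renewal provisions) to typical terms in similar "
    "agreements.\n\n"
    "Organize your response in a numbered list with specific section references."
)


def build_contract_review_prompt(jurisdiction: str) -> str:
    """Return the review prompt with the jurisdiction filled in."""
    jurisdiction = jurisdiction.strip()
    if not jurisdiction:
        # An empty jurisdiction would silently weaken the risk assessment.
        raise ValueError("jurisdiction must not be empty")
    return CONTRACT_REVIEW_TEMPLATE.substitute(jurisdiction=jurisdiction)


if __name__ == "__main__":
    # "New York" is an illustrative value only.
    print(build_contract_review_prompt("New York"))
```

The printed text can be pasted into Claude or ChatGPT alongside the uploaded contract. Nothing here calls an AI service; it only assembles the instructions.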

What Happens Next:

In 90 seconds, the AI returns a structured analysis. Your associate doesn't accept it as final work. They review the AI's findings, verify section references, apply their judgment about which issues matter most for this specific client, and draft client-facing advice. Total time: 1.5–2 hours instead of 4–5.

The Law Firm AI ROI:

If this saves 3 hours per contract, and your firm reviews 3 contracts weekly, that's 9 hours saved. At a $300/hour billing rate, you've created capacity worth $2,700 per week, or roughly $140,000 annually. And your associate spent that reclaimed time on work that actually requires human judgment: client strategy, negotiation, relationship building.
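To run the same arithmetic with your own numbers, here is a short sketch. The function name `weekly_capacity` is hypothetical, and the 52-week year is my assumption; the figures above only say "roughly" for the annual total.

```python
def weekly_capacity(hours_saved_per_contract, contracts_per_week, hourly_rate):
    """Return (hours saved per week, billable value of those hours)."""
    hours = hours_saved_per_contract * contracts_per_week
    return hours, hours * hourly_rate


# Figures from the example above: 3 hours saved, 3 contracts, 300 per hour.
hours, weekly_value = weekly_capacity(3, 3, 300)

# Assumption: 52 working weeks, with no allowance for holidays or slow periods.
annual_value = weekly_value * 52

print(hours, weekly_value, annual_value)  # 9 2700 140400
```

Swap in your own contract volume and rate before quoting a figure to partners. A firm reviewing two contracts a week lands at two-thirds of these totals.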

The key insight: Your associate isn't outsourcing thinking to AI. They're using AI to accelerate the mechanical parts of analysis so they can focus cognitive energy on what genuinely requires expertise.

FREE AI LEGAL PROMPTS

Get 18 copy-paste AI prompts for contract review, discovery, research, and drafting - organized by practice area with advanced prompting techniques included.

Workflow 2: Discovery Document Summarization Using Strategic Prompts

Here's another workflow that demonstrates legal AI prompting in litigation contexts: discovery document summarization.

The Traditional Process:

Your litigation team receives 150 documents in discovery. An associate spends 8–10 hours in total reading through the documents, extracting key facts, identifying potential evidence, and creating a summary memo. This is essential work, but it's brutally time-consuming.

The AI-Directed Process:

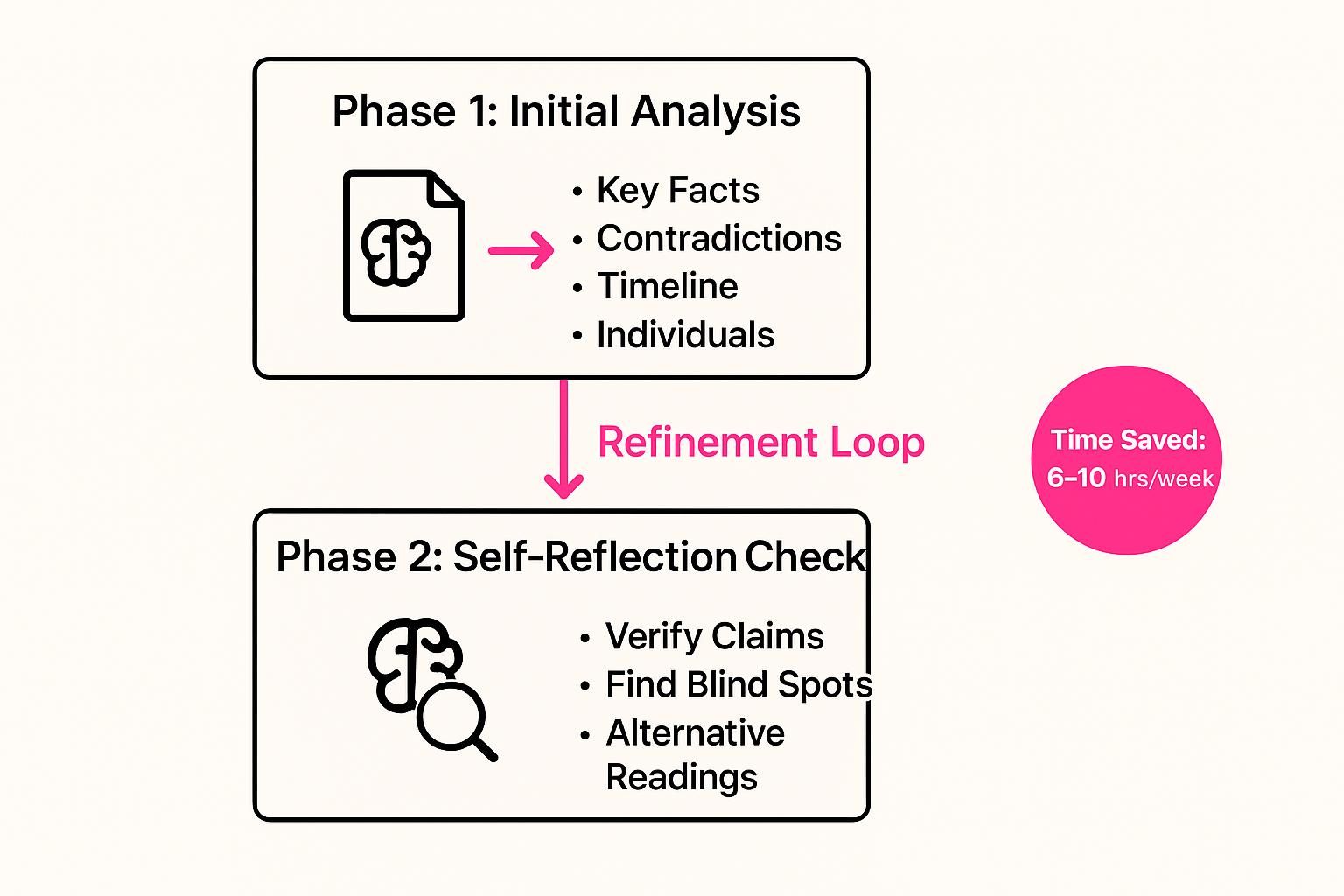

Your associate uses a technique called self-reflection prompting. This is where you ask AI to analyze a document, then ask it to critique its own analysis. It sounds strange, but some research suggests it can improve output quality.

Step 1 – Initial Analysis Prompt:

"You are a litigation attorney reviewing discovery documents in a commercial dispute involving <brief case description>. Read the attached email chain and provide:

Key factual assertions made by each party

Any statements that contradict other evidence we've collected

Potential impeachment material

Timeline of events referenced

Individuals mentioned and their roles"

Step 2 – Self-Reflection Prompt:

"Review your previous analysis. Identify:

Any factual claims you made that aren't directly supported by the document text

Alternative interpretations of ambiguous statements you may have missed

Contextual information that would be necessary to fully assess this document's significance

Any assumptions you made about party intent or meaning"

This two-step process tends to produce more thorough results than a single prompt. The AI catches its own oversights or unsupported inferences in the second pass.
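For teams that want to see the two passes as a repeatable sequence, here is a minimal sketch. It assumes a hypothetical `ask_model` function that takes the conversation so far and returns the AI's reply; it is a stand-in for whichever tool you use and not a real library call. The prompts are abbreviated versions of the two above.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical interface: takes the conversation so far, returns the reply text.
AskModel = Callable[[List[Message]], str]

INITIAL_PROMPT = (
    "You are a litigation attorney reviewing discovery documents in a "
    "commercial dispute involving {case}. Read the attached email chain and "
    "provide: key factual assertions by each party, contradictions with other "
    "evidence, potential impeachment material, a timeline of events, and "
    "individuals mentioned with their roles."
)

REFLECTION_PROMPT = (
    "Review your previous analysis. Identify: factual claims not directly "
    "supported by the document text, alternative interpretations you may have "
    "missed, context needed to assess significance, and any assumptions you "
    "made about party intent or meaning."
)


def analyze_with_reflection(document: str, case: str, ask_model: AskModel) -> Dict[str, str]:
    """Run the initial analysis, then ask the model to critique it."""
    first_turn = INITIAL_PROMPT.format(case=case) + "\n\n" + document
    messages: List[Message] = [{"role": "user", "content": first_turn}]
    analysis = ask_model(messages)

    # Step 2 sees the first answer, so the critique refers to it directly.
    messages.append({"role": "assistant", "content": analysis})
    messages.append({"role": "user", "content": REFLECTION_PROMPT})
    critique = ask_model(messages)

    return {"analysis": analysis, "critique": critique}


def fake_model(messages: List[Message]) -> str:
    """Stand-in for a real AI call so the sketch runs without any service."""
    return f"(model reply to message {len(messages)})"


result = analyze_with_reflection("From: A\nTo: B\n...", "a supply contract", fake_model)
print(result["analysis"])
print(result["critique"])
```

The point of the design is that the second prompt is sent in the same conversation as the first, so the AI critiques its own answer instead of starting over. Both outputs still go to the associate for review.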

What Happens Next:

Your associate reviews both outputs, verifies citations, cross-references with other discovery materials, and creates the final summary with strategic insights about how to use the document. Time spent: 3–4 hours instead of 8–10.

The Pattern:

Notice what both workflows have in common. The lawyer isn't asking AI to "summarize this" or "review that." They're providing a structured analytical framework that mirrors how they'd brief a colleague. They're directing the AI's reasoning process, then applying human judgment to verify and refine the output.

This is AI for legal research efficiency in practice.

You're not replacing the associate's expertise. You're amplifying it.

And the firms adopting these approaches are finding they can handle larger caseloads with the same team size, or deliver faster turnarounds at the same quality level.

If you automate chaos, you get faster chaos.

Quick Hits

Thomson Reuters Releases AI Readiness Assessment for Law Firms

Thomson Reuters published a new framework this week for evaluating law firm readiness for AI integration. The assessment focuses on strategy, technical infrastructure, team capabilities, and ethical compliance—giving managing partners a diagnostic tool to identify gaps before deployment. The resource addresses the critical shift from "should we adopt AI" to "what needs to be in place first."

Read the full report →

Reuters Legal: Five-Step Framework for Corporate Legal AI Adoption

Reuters Legal published a practical implementation framework specifically designed for legal departments navigating AI adoption. The article acknowledges that AI adoption has "reached a tipping point" and provides structured guidance on vendor evaluation, workflow integration, change management, and ROI measurement. The emphasis: successful adoption isn't about hiring data scientists—it's about giving existing teams a clear playbook.

View the article →

The Bottom Line

Your firm doesn't necessarily need a Chief AI Officer.

It doesn't need lawyers who master every AI tool.

It needs lawyers who can become AI directors—professionals who think clearly about what they want AI to execute, structure those instructions like they'd brief a junior associate, and apply legal judgment to verify the results.

That's a skill you can teach in weeks, not years.

And it's the difference between firms that are "exploring AI" and firms saving 6–10 hours per week per lawyer on routine work like contract review and discovery.

Start with one of the workflows above. Train two people. Measure the time saved. Then expand.

Have a great week, and please share any feedback you have below.

— Liam Barnes

P.S. Want us to run a Gemini, Claude or ChatGPT workshop for your team?

Grab time below to chat.

How Did We Do?

Your feedback shapes what comes next.

Let us know if this edition hit the mark or missed.

Too vague? Too detailed? Too long? Too short? Too pink?