📋 In Today's Issue

Welcome to Edition 003 of The AI Law.

What a "Harvey‑class" legal copilot actually does (and costs)

The 80/20 DIY blueprint using inexpensive off‑the‑shelf tools

Build vs buy decision matrix—with real TCO numbers

Free download: DIY Legal Copilot Roadmap: The $15K Blueprint

Welcome Back

Last week a Managing Partner asked me, "Can we just build our own Harvey? I'm tired of waiting for vendor contracts and watching other firms get ahead."

Fair question. You've seen the headlines—firms deploying AI copilots that draft memos, extract clauses from 500-page agreements, and answer research questions in seconds.

You've sat through demos where vendors promise "Harvey-class" capabilities.

And you're wondering: could we build something like that in-house for less?

Short answer: yes. But with caveats.

This week I'm walking you through what it actually takes to DIY a legal copilot—the architecture, the costs, the gotchas—and giving you a partner-ready decision matrix so you can figure out whether build vs buy makes sense for your firm.

Lessdoit.

Managing partners at 2 AM after Googling 'how to build your own Harvey'

What a "Harvey-Class" Legal Copilot Actually Does (and What It Costs)

Before you decide whether to build or buy, you need to understand what you're actually buying when a vendor sells you a "legal copilot."

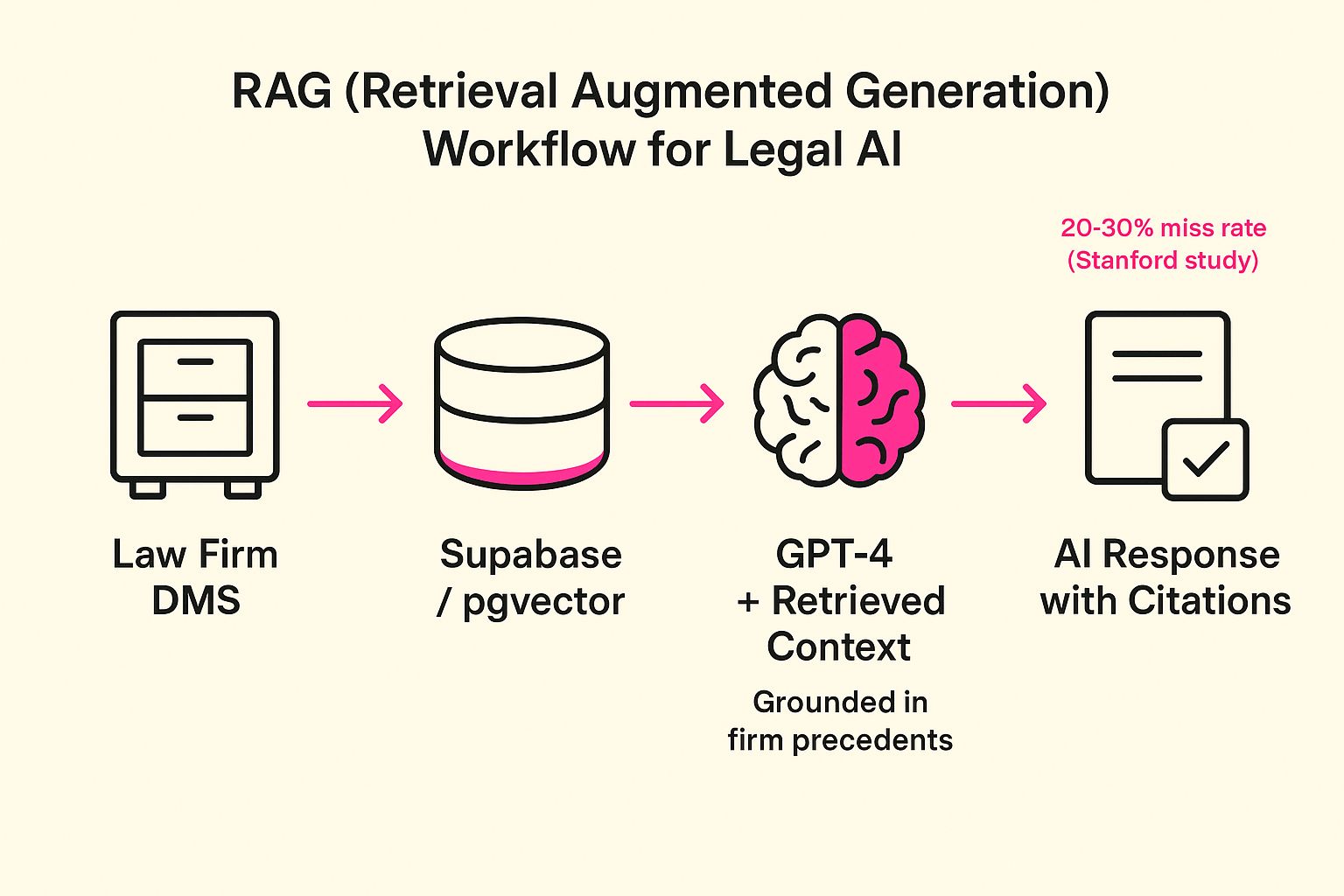

Strip away the marketing and here's what these tools do: they take your firm's documents, break them into searchable pieces (think: cutting a book into chapters), store them in a vector database (a specialized filing cabinet that understands meaning, not just keywords), and use something called RAG—retrieval-augmented generation—to ground AI responses in your actual files instead of letting the model make things up.

RAG is basically Google Search for your law firm documents, but smarter. When you ask, "What defenses did we raise in the Smith case?" the system isn't guessing from GPT-4's memory.

It's searching your DMS, finding the relevant motion, pulling the defense section, and handing that context to the AI so the answer comes back with page-level citations to your work product.

That's the difference between "AI that sounds confident" and "AI you can trust in a partner meeting."

Here's why that matters. Without RAG, you're just using ChatGPT with a legal-sounding prompt. With RAG, you're querying a system that knows your precedents, your case strategies, and your clients—because it's indexed every document in your DMS and can retrieve the right ones in real time.
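If you want to show your IT team what "grounding" means, here is a minimal Python sketch of the retrieve-then-prompt step. It is a toy under stated assumptions: the file names, the sample text, and the word-overlap scoring are all made up for illustration (a real system would use embeddings and a vector database), and the final prompt would be sent to whichever LLM you rent.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    doc: str    # source document name (hypothetical)
    page: int   # page the excerpt came from
    text: str

def _words(s: str) -> set:
    """Lowercase the text and strip basic punctuation from each word."""
    return {w.strip("?.,").lower() for w in s.split()}

def score(question: str, chunk: Chunk) -> int:
    """Toy relevance score: how many question words appear in the chunk."""
    return len(_words(question) & _words(chunk.text))

def retrieve(question: str, chunks: list, k: int = 2) -> list:
    """Return the k highest-scoring chunks."""
    return sorted(chunks, key=lambda c: score(question, c), reverse=True)[:k]

def build_prompt(question: str, retrieved: list) -> str:
    """Put the retrieved excerpts, with citations, in front of the question."""
    context = "\n".join(f"[{c.doc}, p.{c.page}] {c.text}" for c in retrieved)
    return (
        "Answer using only the excerpts below and cite the source in brackets.\n"
        f"{context}\n\nQuestion: {question}"
    )

chunks = [
    Chunk("smith_motion.pdf", 4, "Defendant raises the statute of limitations as a defense."),
    Chunk("smith_motion.pdf", 9, "Defendant also raises waiver as a defense in the Smith case."),
    Chunk("acme_lease.pdf", 2, "Rent is due monthly."),
]
question = "What defenses did we raise in the Smith case?"
top = retrieve(question, chunks)
prompt = build_prompt(question, top)
print(prompt)
```

The point of the design is that the model only sees excerpts you retrieved, each tagged with a document and page, so its answer can carry citations back to your own files.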

Stanford just published a study showing that even the best RAG systems miss critical information 20–30% of the time when tested on complex legal queries.

They tested four leading platforms (two legal-specific, two general) and found that while RAG dramatically improved accuracy over raw GPT-4, none of the systems caught every relevant clause in multi-document scenarios.

That's not a reason to avoid RAG. It's a reason to understand what you're building—and why supervision matters.

So what does a legal copilot actually do with this architecture?

Contract analysis: Upload a 60-page agreement, ask "What are the indemnification obligations?" and get an answer with page-level citations in 15 seconds instead of spending 45 minutes reading the whole thing.

Legal research: Query your firm's past work product for similar fact patterns, relevant motions, or successful arguments—without manually searching iManage for two hours.

Document drafting: Pull clause language from vetted precedents instead of starting from scratch or hoping the associate remembered the right template.

Due diligence: Ask "Which contracts have change-of-control provisions?" across 300 documents and get a list in minutes instead of days.

That's the promise. But here's what vendors don't tell you: these capabilities live or die based on three things that have nothing to do with the AI model.

First: document quality and structure. If your DMS is a mess (inconsistent file naming, no metadata, scanned PDFs with bad optical character recognition, or OCR), RAG will struggle to find the right documents no matter how good the retrieval algorithm is.

Second: integration with your existing tools. If your copilot can't pull documents directly from your DMS or push drafted clauses back into Word without manual copy-paste, adoption will stall at roughly 12% (based on research), because no one wants to learn a separate workflow.

Third: human-in-the-loop governance. Even the best RAG systems need review. Stanford found that precision (not returning irrelevant docs) was high, but recall (finding all the relevant docs) lagged.

That means you need a workflow where associates verify completeness, not just accuracy.
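The precision versus recall distinction is easy to check on a test set. A minimal sketch, assuming you have a hand-labeled list of which documents are truly relevant to a query (the document IDs below are hypothetical):

```python
def precision_recall(retrieved: set, relevant: set) -> tuple:
    """Precision: share of retrieved docs that are relevant.
    Recall: share of relevant docs that were retrieved."""
    hits = retrieved & relevant
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall

# The system returned three documents, all relevant, but missed a fourth.
retrieved = {"doc_a", "doc_b", "doc_c"}
relevant = {"doc_a", "doc_b", "doc_c", "doc_d"}

p, r = precision_recall(retrieved, relevant)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=1.00 recall=0.75
```

Everything the system returned was right, yet a quarter of what mattered never surfaced. That missing document is what an associate's completeness check is there to catch.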

Now let's talk cost. Harvey's prices sit behind a salesperson, but let's go with the rough estimate of $1,000–$1,200 per seat per month, or roughly $12K–$15K annually per user.

For a 50-lawyer firm that wants to give 20 associates access, you're looking at $240K–$300K annually, and costs may climb 30–40% with premium content bundles or enterprise features, as is all too common in this category of SaaS.
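To rerun the buy-side math with your own seat count, here is a small sketch. The function name and the uplift parameter are mine, and the per-seat prices are the rough estimates above, not quoted vendor pricing.

```python
def annual_seat_cost(per_seat_month: float, seats: int, uplift: float = 0.0) -> float:
    """Annual licence cost for a number of seats, with an optional fractional uplift."""
    return per_seat_month * 12 * seats * (1 + uplift)

low = annual_seat_cost(1_000, 20)
high = annual_seat_cost(1_200, 20)
high_with_uplift = annual_seat_cost(1_200, 20, uplift=0.40)

print(f"Base range: ${low:,.0f} to ${high:,.0f}")
print(f"Top of range with a 40% uplift: ${high_with_uplift:,.0f}")
```

At exactly $1,200 per seat the top of the base range works out to $288K. The $300K figure comes from rounding the per-user cost up to $15K a year.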

That's the buy side. What does build look like?

The 80/20 DIY Blueprint Using Inexpensive Off-the-Shelf Tools

Here's the truth: you can build an 80%-capable legal copilot for under $30K in year-one costs if you're willing to accept some technical debt and limited scalability.

You won't match Harvey's polish, but you'll get document search, clause extraction, and precedent retrieval working in 8–12 weeks.

Before I walk you through the pieces, here's what you need to know: I'm about to throw some tool names and technical terms at you.

You don't need to become an expert—this is the brief you hand your IT team or a contractor.

I'll explain each piece in plain English as we go. What matters is understanding how this works and what's needed so when you talk to a technical person, you're on the same page.

Here's the stack that actually works for mid-sized firms without dedicated AI engineers.

Component 1: n8n for workflow automation. Think of this as the air traffic controller for your documents. n8n connects your DMS (iManage, NetDocuments, SharePoint) to your vector database and lets you build workflows without writing code.

When a new contract gets uploaded to your DMS, n8n automatically breaks it into chunks, generates embeddings (numerical representations the AI can search), and stores them—no human babysitting required.

Cost: Self-hosted is free. Cloud-hosted starts at $20–$50/month depending on workflow volume.

Component 2: Supabase for your vector database. This is where you store all those searchable document pieces. Think of it as a smart filing cabinet that understands meaning, not just keywords.

Supabase is an open-source tool with built-in vector search (the tech is called pgvector, but you don't need to remember that). When someone asks a question, the system searches this database for the most relevant chunks and hands them to the AI.
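For the technical person reading over your shoulder, "searching by meaning" boils down to ranking stored vectors by similarity to the question's vector. Here is a minimal in-memory sketch. The three-number embeddings and chunk IDs are invented for illustration; a real setup would use embeddings with hundreds or thousands of dimensions and let the database do this ranking.

```python
import math

def cosine(a: list, b: list) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vec: list, store: list, k: int = 2) -> list:
    """Return the k stored chunks most similar to the query vector."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item["embedding"]), reverse=True)
    return ranked[:k]

# Hypothetical chunks with made-up embeddings.
store = [
    {"id": "nda_clause_7", "embedding": [0.9, 0.1, 0.0]},
    {"id": "lease_rent", "embedding": [0.0, 0.2, 0.9]},
    {"id": "msa_indemnity", "embedding": [0.8, 0.3, 0.1]},
]
query = [1.0, 0.2, 0.0]

results = [item["id"] for item in top_k(query, store)]
print(results)  # ['nda_clause_7', 'msa_indemnity']
```

The lease chunk points in a different direction from the query, so it drops out even though no keywords were compared.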

Why Supabase instead of fancier options?

Two reasons. First, it's cheaper—free for prototypes, $25/month for production workloads under 8GB. Second, your IT team probably already knows the underlying tech, which means fewer headaches for backups, permissions, and troubleshooting.

Cost: $25–$100/month depending on document volume.

Component 3: OpenAI or Anthropic for your LLM. You're not training a custom AI model (that costs millions). You're renting one via API—like paying for cloud storage instead of buying your own servers.

When someone asks, "What indemnification language did we use in the Acme deal?" your system retrieves the relevant document chunks from Supabase, passes them to GPT-4 or Claude, and the model generates an answer grounded in your firm's precedents.

Cost: $0.01–$0.03 per 1,000 tokens (roughly 750 words).

For a 50-lawyer firm running 500 queries per week, expect $200–$500/month.
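You can sanity-check that monthly figure with a back-of-the-envelope estimate. In the sketch below, the 8,000 tokens per query (question, retrieved chunks, and answer combined) is my assumption, not a measured number; swap in your own once you have real usage data.

```python
def monthly_llm_cost(queries_per_week: float, tokens_per_query: float,
                     price_per_1k_tokens: float, weeks_per_month: float = 52 / 12) -> float:
    """Estimated monthly API spend in dollars."""
    monthly_tokens = queries_per_week * weeks_per_month * tokens_per_query
    return monthly_tokens / 1000 * price_per_1k_tokens

# 500 queries per week at an assumed 8,000 tokens per query.
low = monthly_llm_cost(500, 8_000, 0.01)
high = monthly_llm_cost(500, 8_000, 0.03)
print(f"Estimated monthly API cost: ${low:,.0f} to ${high:,.0f}")
```

Under that assumption the estimate lands in the same ballpark as the range above. The number to watch is tokens per query, because stuffing more retrieved chunks into each prompt raises the bill linearly.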

Component 4: DMS connectors. This is the hard part—and where internal IT saves your budget. You need to extract documents from your DMS, process them (OCR if necessary), chunk them into 500–1,000 word segments, and generate those embeddings I mentioned.

n8n can handle the orchestration, but you'll need a developer to write custom connectors if your DMS doesn't have a clean API.

For iManage, NetDocuments, or SharePoint, expect 40–60 hours of developer time to build reliable extraction workflows. For legacy systems without APIs, double that.

Cost: $6,000–$12,000 one-time (assuming $150/hour contractor rates).
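The chunking step is the simplest piece to show a developer. Here is a minimal sketch that splits text into fixed-size word windows. The 50-word overlap between neighbouring chunks is my addition (a common way to avoid cutting a clause in half), not something this blueprint requires.

```python
def chunk_words(text: str, chunk_size: int = 500, overlap: int = 50) -> list:
    """Split text into chunks of up to chunk_size words.
    Each chunk starts (chunk_size - overlap) words after the previous one."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks

# A fake 1,200-word document: w0 w1 ... w1199
sample = " ".join(f"w{i}" for i in range(1200))
pieces = chunk_words(sample)
sizes = [len(p.split()) for p in pieces]
print(len(pieces), sizes)  # 3 [500, 500, 300]
```

In a real pipeline each chunk would keep its document ID and page number alongside the text, so answers can cite where they came from.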

Component 5: A simple front-end. You don't need a fancy UI. A Slack bot or Microsoft Teams integration works fine for prototypes.

Users type questions in Slack, your system queries the vector database, retrieves relevant chunks, sends them to GPT-4, and returns the answer—all in 5–10 seconds.

For a more polished interface, use Retool or Bubble to build a web app where users can upload documents, run searches, and view citations.

Cost: $0 (Slack/Teams free version, with limited features) to $2,000–$4,000 (Retool/Bubble setup).

Total year-one build cost: $8,000–$17,000 for a working prototype.

Add another $5,000–$10,000 annually for ongoing API costs, hosting, and maintenance.

That's 85–95% cheaper than buying Harvey for 20 seats.
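Here is the comparison as arithmetic you can rerun with your own numbers. It is a sketch that takes the build and buy ranges above at face value and combines one-time and recurring build costs into a year-one total; the variable and function names are mine.

```python
build_one_time = (8_000, 17_000)    # prototype build, low and high
build_recurring = (5_000, 10_000)   # annual API, hosting, maintenance
buy_annual = (240_000, 300_000)     # 20 seats, low and high

def pct_cheaper(build: float, buy: float) -> float:
    """How much cheaper build is than buy, as a percentage of the buy cost."""
    return (1 - build / buy) * 100

year_one_low = build_one_time[0] + build_recurring[0]
year_one_high = build_one_time[1] + build_recurring[1]

# Least favourable case: most expensive build against cheapest buy.
worst = pct_cheaper(year_one_high, buy_annual[0])
# Most favourable case: cheapest build against most expensive buy.
best = pct_cheaper(year_one_low, buy_annual[1])

print(f"Year-one build: ${year_one_low:,} to ${year_one_high:,}")
print(f"Savings vs buy: {worst:.1f}% to {best:.1f}%")
```

With recurring costs included, the savings come out at roughly 89% to 96% in cash terms. Your own time is not in this calculation.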

But here's what you're not getting: enterprise-grade security, multi-jurisdiction compliance certifications, dedicated support, pre-built integrations with 50+ legal tools, and a vendor who takes liability if something goes wrong.

You're also not getting automatic updates when OpenAI ships GPT-5, or when retrieval algorithms improve, or when new compliance requirements drop.

That's on you to maintain (or a partner like the one mentioned at the end of this newsletter).

And here's the gotcha most firms miss: time to value.

Yes, you can build this for $15K in cash costs. But it will take 8–12 weeks of part-time developer work, 20–30 hours of your time scoping requirements, and another 40–60 hours testing and refining retrieval quality.

If you're a managing partner billing at $500/hour and all 60–90 of those hours are yours, that's $30K–$45K of your time, which erases most of the cost savings.

That's why the build vs buy decision isn't just about price. It's about time, risk, and who owns the liability when something breaks.

Build vs Buy Legal AI: The Partner-Ready Decision Matrix

Here's how to decide.

The build case makes sense if three things are true:

First, you have internal technical capacity—either a developer on staff or budget to hire a contractor for 3–6 months.

Second, you're comfortable owning the maintenance and governance—someone needs to monitor retrieval quality, update connectors when your DMS changes, and retrain the system as your document corpus grows.

Third, you have a clear, narrow use case—like "search our M&A precedents" or "extract key terms from NDAs"—instead of trying to build a general-purpose research assistant.

If those three things are true, build can save you $40K–$80K annually and give you more control over your data.

The buy case makes sense if any of these are true:

You need enterprise-grade security and compliance certifications (SOC 2, ISO 27001, data residency guarantees).

You want integrations with your existing tools—DMS, billing, CRM—without custom development.

You need fast time-to-value—vendor pilots run in 2–4 weeks, builds take 8–12.

You don't have internal technical resources to own ongoing maintenance.

Or you want a vendor who takes liability if the system returns incorrect legal advice.

Most mid-sized firms should buy. Here's why.

The hard part of legal AI isn't the technology—it's the governance.

You need audit logs showing who queried what, when, and whether a human reviewed the output before it went to a client.

You need role-based permissions so associates can't access partner-only work product.

You need compliance with your malpractice carrier's AI usage requirements.

And you need a vendor who will testify in court if opposing counsel alleges your AI tool missed a critical clause.

Vendors charge $1,000+ per seat per month because they're selling insurance as much as software.
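If you do build, the audit-log and role-based-permission requirements above are the parts to prototype first. Here is a minimal sketch of the idea. The role names, the ranking scheme, and the log fields are hypothetical, and a real deployment would inherit permissions from your DMS rather than a hard-coded table.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

ROLE_RANK = {"associate": 1, "partner": 2}  # hypothetical roles

@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, user: str, query: str, doc_id: str, allowed: bool) -> None:
        """Log who asked what, when, and whether access was granted."""
        self.entries.append({
            "when": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "query": query,
            "doc_id": doc_id,
            "allowed": allowed,
            "human_reviewed": False,  # flipped once a lawyer signs off on the output
        })

def can_access(user_role: str, doc_min_role: str) -> bool:
    """A user may see a document only at or above its minimum role."""
    return ROLE_RANK[user_role] >= ROLE_RANK[doc_min_role]

def fetch(user: str, role: str, query: str, doc: dict, log: AuditLog):
    """Check permissions, log the attempt either way, return text if allowed."""
    allowed = can_access(role, doc["min_role"])
    log.record(user, query, doc["id"], allowed)
    return doc["text"] if allowed else None

log = AuditLog()
memo = {"id": "strategy_memo", "min_role": "partner", "text": "Partner-only strategy notes."}

associate_view = fetch("a.lee", "associate", "settlement strategy", memo, log)
partner_view = fetch("p.shah", "partner", "settlement strategy", memo, log)
print(associate_view, "|", partner_view, "|", len(log.entries), "log entries")
```

Denied requests are logged too, which is the record you would want if access is ever questioned.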

But there's a third option most firms ignore: the hybrid approach.

Start with a build for a single, high-value use case—like "search our litigation precedents for summary judgment briefs."

Use that pilot to prove ROI, test retrieval quality, and train partners on AI-assisted workflows.

Then, once you've validated the value, buy a vendor solution and migrate your data.

This gives you two wins.

First, you get a working system in 8–12 weeks without waiting for vendor sales cycles.

Second, you go into vendor negotiations knowing exactly what features matter because you've already built a version—which means you'll negotiate better pricing and avoid paying for capabilities you won't use.

Here's the decision matrix.

If your firm has fewer than 30 lawyers, buy—you don't have the scale to justify custom development.

If you have 30–100 lawyers and internal IT resources, pilot a build for one practice area, then buy for firm-wide rollout.

If you have 100+ lawyers and a CTO, build core infrastructure but buy specialized modules (like contract analysis or e-discovery) where vendors have deep domain expertise.
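The matrix above can be written down as a few lines of logic, which is handy if you want to circulate it internally. One assumption of mine: the matrix does not cover a 30–100 lawyer firm without internal IT, or a 100+ lawyer firm without a CTO, so this sketch falls back to "buy" for those, in line with the view that most mid-sized firms should buy.

```python
def recommend(lawyers: int, has_internal_it: bool = False, has_cto: bool = False) -> str:
    """Rough build-vs-buy recommendation based on firm size and technical capacity."""
    if lawyers < 30:
        return "buy"
    if lawyers >= 100 and has_cto:
        return "build core, buy specialized modules"
    if lawyers <= 100 and has_internal_it:
        return "pilot a build, then buy"
    # Cases the matrix leaves open: default to buy (my assumption).
    return "buy"

for firm in [
    {"lawyers": 20},
    {"lawyers": 50, "has_internal_it": True},
    {"lawyers": 150, "has_cto": True},
    {"lawyers": 50},
]:
    print(firm, "->", recommend(**firm))
```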

One more thing.

Whichever path you pick, start with baseline metrics. Measure how long it currently takes associates to find precedent language, extract clauses from contracts, or research a specific legal question.

Capture time-per-task for 10–15 typical queries. Then measure again at 30, 60, and 90 days post-launch.

That's how you prove ROI to partners who think AI is hype.

You show them a one-pager that says, "We used to spend 2.3 hours per contract review. Now we spend 1.4 hours. That's 36 hours saved per associate per month, which at $500/hour is $18K in additional billable capacity per associate each month."
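Here is a small sketch for producing that one-pager from your own baseline numbers. The 40 reviews per month is an assumption I chose because it turns 0.9 hours saved per review into the 36 hours in the example, and the $500/hour rate is a placeholder. Use your firm's actual volumes and rates.

```python
def hours_saved_per_month(before_hours: float, after_hours: float, reviews_per_month: int) -> float:
    """Hours saved per associate per month, rounded to two decimals."""
    return round((before_hours - after_hours) * reviews_per_month, 2)

saved = hours_saved_per_month(2.3, 1.4, reviews_per_month=40)
monthly_value = saved * 500  # placeholder billing rate of $500/hour

print(f"Hours saved per associate per month: {saved}")
print(f"Billable capacity freed per month: ${monthly_value:,.0f}")
```

Run the same calculation at 30, 60, and 90 days with measured times so the trend, not a single snapshot, makes the case.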

Numbers beat promises. Every time.

DIY Legal Copilot Roadmap: The $15K Blueprint

(FREE Download)

The step-by-step technical roadmap to build your own 'Harvey-class' legal copilot in 8–12 weeks—or hand it to a contractor as a ready-to-execute brief.

"We see the wave coming. By this time next year, every company has to implement it—not just have a strategy. Implement it."

Some articles related to this week's content

Stanford study finds legal AI accuracy gaps: Researchers tested four leading RAG-based legal research platforms and found recall rates (finding all relevant information) lagged precision (avoiding irrelevant results) by 15–25 percentage points. The takeaway? Even the best systems need human verification, especially for high-stakes work.

Read the study →

Microsoft Copilot challenges for law firms: Legal tech analysis highlights integration friction, data governance concerns, and partner adoption barriers as top challenges for firms deploying Copilot in contentious work. Early adopters report 3–6 month onboarding timelines even for firms already using Microsoft 365.

Read the analysis →

Build vs buy frameworks evolve for legal AI: New guidance from legal tech consultants emphasizes a third path—hybrid models where firms build core infrastructure but buy specialized modules. The shift reflects growing recognition that one-size-fits-all vendor solutions miss firm-specific workflows.

Read the guidance →

That's a Wrap

That's it for this week. I hope this gave you a clearer picture of what it actually takes to build a legal copilot—and whether it makes sense for your firm.

If you're planning a build-or-buy decision this quarter, grab the DIY Legal Copilot Roadmap above.

Got a build vs buy question I didn't cover? Reply and tell me. I read every response.

See you next week.

— Liam Barnes

P.S. Want our expert team to custom build your very own version of Harvey? We provide AI consultancy and development for law firms. Grab time for an introductory call.

Visit www.cyberaktiveai.com

How Did We Do?

Your feedback shapes what comes next. Let us know if this edition hit the mark or missed.