📋 In Today's Issue

Welcome to Edition 002 of The AI Law.

Why 78% of law firms avoid AI (and what the smart 22% know)

The three fatal mistakes killing AI pilots before they launch

An 8-week roadmap that works for mid-sized firms

Free download: Your AI Pilot Scorecard to stress-test any project before you commit

Welcome Back

Last week we walked through contract review automation—the tools, the workflow, the ROI calculator.

A bunch of you asked the same thing: “This looks great, but how do I know if we’re ready? Our last tech project died after three months.”

That’s what this edition is about.

Across the industry, firms are hitting the same wall. Not because the tech doesn’t work. Not because partners don’t see the value.

But because most firms skip the three foundational steps that separate pilots that scale from pilots that die quietly in a Slack channel no one checks anymore.

I’m going to show you what those steps are, why they matter more than the tool you pick, and give you an 8‑week framework you can use this quarter to avoid becoming another cautionary tale.

Let’s get this show on the road.

Liam Barnes

Why 78% of Law Firms Avoid AI Adoption (Hint: It's Not the Tech)

It’s not 2019 anymore. Every mid‑sized firm has a partner who’s played with ChatGPT for drafting or tried Harvey for research. Most have sat through a demo that promised 40% time savings and “seamless integration.”

So why do 78% of firms still avoid meaningful AI adoption?

Because last time it went like this: Someone bought a tool. IT set it up. A few associates used it for two weeks. Then everyone went back to Word templates and manual research because the tool didn’t fit the workflow, no one tracked whether it saved time, and the partner who championed it got pulled into a big trial and forgot about it.

That’s not an AI problem. That’s a pilot design problem.

The 22% who actually scale AI do three things differently before they ever sign a contract. They run a structured evaluation to pick the right process. They set baseline metrics so they can prove ROI later. And they get partner buy‑in by showing numbers, not features.

Here’s what happens when you skip them. You pick a process because it sounds impressive, not because it’s measurable. You don’t capture time‑per‑task, so six months later you can’t prove the pilot worked. And you assume partners will adopt the tool because “it’s obviously better,” then watch adoption stall at 12% because no one made the case for why it matters to their billings.

I’ve seen this play out at firms with 40 associates and firms with 120. The pattern is identical. Enthusiasm at kickoff. Confusion at month two. Silence at month four. Then the tool becomes shelfware, and the next time someone suggests AI, the managing partner says, “We tried that. It didn’t work.”

The firms that avoid this don’t have better technology.

They have better process. And that process starts with understanding the three places AI pilots go to die.

The Three Fatal Mistakes That Kill AI Projects in Legal Practices

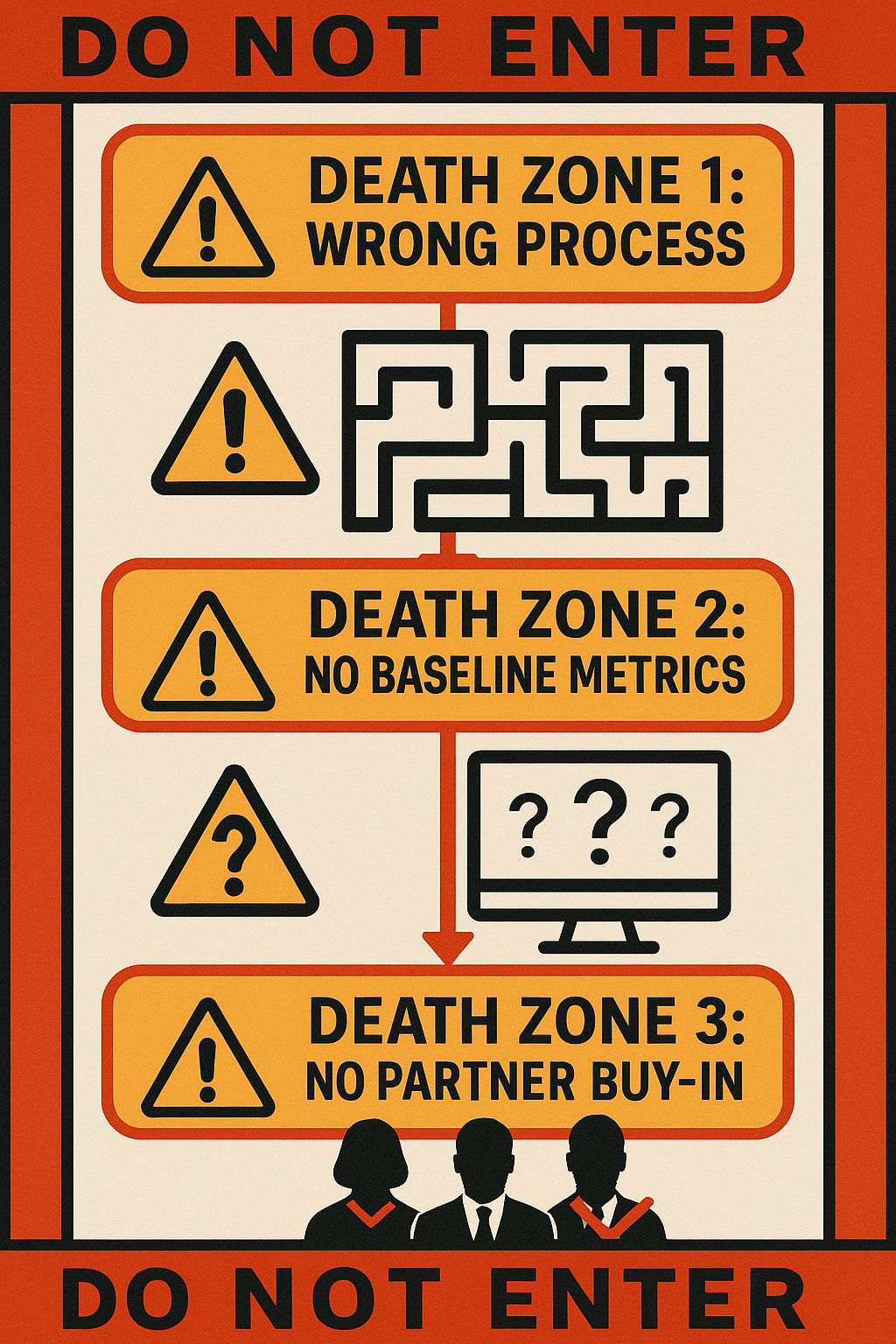

I call these the Three Death Zones. If your pilot hits even one, your odds of scaling drop to near zero. Hit all three and you’re not running a pilot—you’re running an expensive experiment that ends in a budget meeting where someone asks, “Why did we spend $60K on this again?”

Death Zone 1: You Pick the Wrong Process

Most firms pick their first AI pilot based on gut feel or a vendor suggestion. “Legal research sounds like a good fit.” “Everyone hates document review—let’s automate that.”

Here’s the problem. If the process isn’t standardized, measurable, and high‑volume, AI can’t prove ROI fast enough to build momentum. You need to be able to say, “We used to spend 4.2 hours per contract. Now we spend 2.8. That’s 336 hours saved across 40 associates per quarter.”
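If you want to sanity-check that kind of claim before it goes in front of partners, here is a minimal sketch of the arithmetic. The 4.2 and 2.8 hour figures come from the example above. The volume of 240 contracts per quarter is my assumption, chosen because it is what the 336-hour figure implies; swap in your own count.

```python
def hours_saved(baseline_hours: float, pilot_hours: float, tasks_per_period: int) -> float:
    """Total hours saved in a period: per-task saving times task volume."""
    return (baseline_hours - pilot_hours) * tasks_per_period


# Figures from the example: 4.2 hours before, 2.8 hours after.
# ASSUMPTION: 240 contracts per quarter across the firm (implied, not stated).
contracts_per_quarter = 240
associates = 40

total_saved = hours_saved(4.2, 2.8, contracts_per_quarter)
per_associate = total_saved / associates

print(f"Saved per quarter: {total_saved:.1f} hours")
print(f"Saved per associate: {per_associate:.1f} hours")
```

The point is that the headline number only exists if you know both the per-task time and the volume, which is why a low-volume or inconsistent process can't produce one.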

If you pick a process where time varies wildly, it only happens twice a month, or three departments do it three different ways, you’ll never get clean data. And without clean data, you can’t prove value. And without proof of value, you can’t get budget for the next phase.

The fix is simple. Before you pick a process, score it on three criteria: standardization (does everyone do it the same way?), volume (does it happen often enough to matter?), and measurability (can you capture time‑per‑task today?). If a process scores low on any of those, it’s the wrong first pilot. Save it for phase two.

Death Zone 2: No Baseline Metrics

This is the most common mistake—and it’s fatal. You launch the pilot, everyone agrees it “feels faster,” but six months later when the CFO asks for proof, you’ve got nothing. No before‑and‑after comparison. No time saved per associate. No impact on realization rates or write‑downs.

The barriers to AI adoption at law firms aren’t technical. They’re cultural and operational. And without baseline metrics, you can’t overcome the cultural skepticism that kills adoption. Partners need proof, not promises. “This tool is great” doesn’t get budget. “This tool saved us 340 billable hours in Q3 and lifted realization by 3 points” does.

The fix is non‑negotiable. Before you flip the switch on any AI tool, measure your current state. Time per task. Error rate. Client turnaround time. Realization for that process. Whatever metric matters most to your CFO—capture it now. Then measure again at 30, 60, and 90 days post‑launch. That’s how you build an undeniable business case for phase two.

Death Zone 3: No Partner Buy‑In Strategy

Here’s the hard truth. If your top three billing partners don’t use the tool, no one else will either. Associates take their cues from partners. If partners keep drafting memos the old way, associates will too—no matter how much faster the AI tool is.

Most firms assume buy‑in happens automatically. “The tool is obviously better, so people will use it.” That’s not how behavior change works. Partners are busy, skeptical of tools that might create risk, and protective of workflows that already work for them.

Treat partner buy‑in like a sales process. You need a champion partner who will pilot it publicly, track their time savings, and share results at the next partner meeting. Show ROI in their language—billable hours saved, realization lift, client satisfaction. Remove friction by integrating the tool into their existing workflow, not asking them to learn a whole new system.

If you skip this step, your pilot will have 20% adoption, zero momentum, and a managing partner who quietly kills it at the next budget review.

These death zones are predictable and avoidable. The firms that scale AI don’t skip these steps. They bake them into an 8‑week roadmap that front‑loads the hard work so the pilot has a real shot at success.

8-Week AI Implementation Roadmap for Mid-Sized Law Firms

This is the framework I’ve seen work at firms with 30 associates and firms with 90. It’s not fast. It’s not sexy. But it works because it forces you to answer the hard questions before you sign a vendor contract.

Weeks 1–2: Process Evaluation & Selection

Your goal is to pick the right first process using a structured scorecard. You’re evaluating three things: standardization, measurability, and volume.

Standardization means everyone does it the same way. If your litigation team reviews contracts differently than your corporate team, that’s low standardization. Measurability means you can capture time‑per‑task today and prove improvement later. Volume means it happens often enough to generate meaningful time savings—at least weekly, ideally daily.

Score each candidate process on those three criteria (1–5 scale). Anything below 12 out of 15 is a bad first pilot. You’re looking for quick wins that can demonstrate ROI in 90 days, not transformative projects that take 18 months to show value.
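If you would rather keep the scoring in a script than a spreadsheet, here is a minimal sketch of the rule above: three criteria, each scored 1 to 5, with 12 out of 15 as the cutoff. The class name, field names, and the two sample processes with their scores are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass

PASS_THRESHOLD = 12  # out of 15, per the rule above


@dataclass
class ProcessScore:
    """Scores for one candidate process, each on a 1-5 scale."""
    name: str
    standardization: int
    measurability: int
    volume: int

    def __post_init__(self) -> None:
        # Reject scores outside the 1-5 scale so totals stay comparable.
        for criterion in ("standardization", "measurability", "volume"):
            value = getattr(self, criterion)
            if not 1 <= value <= 5:
                raise ValueError(f"{criterion} must be between 1 and 5, got {value}")

    @property
    def total(self) -> int:
        return self.standardization + self.measurability + self.volume

    @property
    def good_first_pilot(self) -> bool:
        return self.total >= PASS_THRESHOLD


# HYPOTHETICAL candidates and scores, for illustration only.
candidates = [
    ProcessScore("NDA review", standardization=5, measurability=4, volume=4),
    ProcessScore("Legal research memos", standardization=2, measurability=3, volume=4),
]

for candidate in sorted(candidates, key=lambda c: c.total, reverse=True):
    verdict = "first-pilot candidate" if candidate.good_first_pilot else "save for phase two"
    print(f"{candidate.name}: {candidate.total}/15 ({verdict})")
```

Have two or three people score each process independently and compare. If their scores are far apart, that disagreement is itself a sign of low standardization.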

This is also where you identify your internal champion. You need one partner willing to pilot the tool publicly, track results, and evangelize it to the rest of the partnership. If you can’t find that person, your pilot is already in trouble.

Weeks 3–4: Baseline Metrics & Current‑State Mapping

This is the step most firms skip. Don’t. Capture your current performance on the process you’ve selected. Time per task. Error rate. Client turnaround time. Realization. Whatever metric matters most to proving ROI.

Map the current workflow step‑by‑step so you understand where AI fits and where humans still own the work. This is critical for integration later. If you don’t know the current workflow, you can’t design the new one without creating chaos.

At the end of week four, you should have a one‑pager that says: “Today, we spend 4.2 hours per contract review. Our target is 2.8. We’ll measure this weekly for 90 days post‑launch.”
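Once that one‑pager exists, tracking against it is simple arithmetic. Here is a minimal sketch, assuming you log one average time‑per‑review figure each week. The 4.2 and 2.8 hour numbers come from the example above; the weekly readings and the function name are hypothetical.

```python
BASELINE_HOURS = 4.2  # current state, from the one-pager example
TARGET_HOURS = 2.8    # target, from the one-pager example


def progress_to_target(measured_hours: float,
                       baseline: float = BASELINE_HOURS,
                       target: float = TARGET_HOURS) -> float:
    """Share of the planned reduction achieved so far (1.0 means target hit)."""
    return (baseline - measured_hours) / (baseline - target)


# HYPOTHETICAL weekly averages (week number, hours per contract review).
weekly_readings = [(1, 4.1), (4, 3.6), (8, 3.1), (13, 2.8)]

for week, hours in weekly_readings:
    share = progress_to_target(hours)
    print(f"Week {week}: {hours} hours per review, {share:.0%} of the way to target")
```

A value above 1.0 means you beat the target, and a negative value means reviews are taking longer than before the pilot, which is worth catching in week two rather than month four.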

Weeks 5–6: Vendor Evaluation & Integration Planning

Now you’re ready to talk to vendors. But you’re not buying based on features. You’re buying based on integration feasibility, support quality, and alignment with your baseline metrics.

Ask every vendor: Can this tool integrate with our DMS? Can it export time‑saved data in a format we can track? What does onboarding look like, and how much internal IT time will it require? What’s your average time‑to‑value for firms our size?

Build your integration plan. How will documents flow from intake into the AI tool? Where do exceptions route for human review? How do we capture time entries so billing stays clean? This is unglamorous work—but it’s the difference between a tool that gets used and a tool that creates more work than it saves.

Weeks 7–8: Pilot Launch, Training & Early Monitoring

You’ve picked the process. You’ve set baselines. You’ve selected the vendor and mapped integration. Now you launch, but only with your champion partner and a small pilot group (5–10 people).

Your goal in weeks seven and eight isn’t perfection. It’s learning. Watch where people get stuck. Capture early time‑saved data. Gather feedback on what works and what creates friction. Adjust the workflow in real time based on what you learn.

At the end of week eight, do a checkpoint review. Are we hitting our time‑saved target? Is adoption where we expected it? What blockers have we hit—and how do we fix them? If the pilot works, expand to the next group. If it doesn’t, pause and diagnose before you scale a broken process.

This 8‑week roadmap won’t make you the fastest firm to adopt AI. It will make you the firm that actually scales it, proves ROI, and builds momentum for phase two—instead of becoming another cautionary tale about why “AI doesn’t work in legal.”

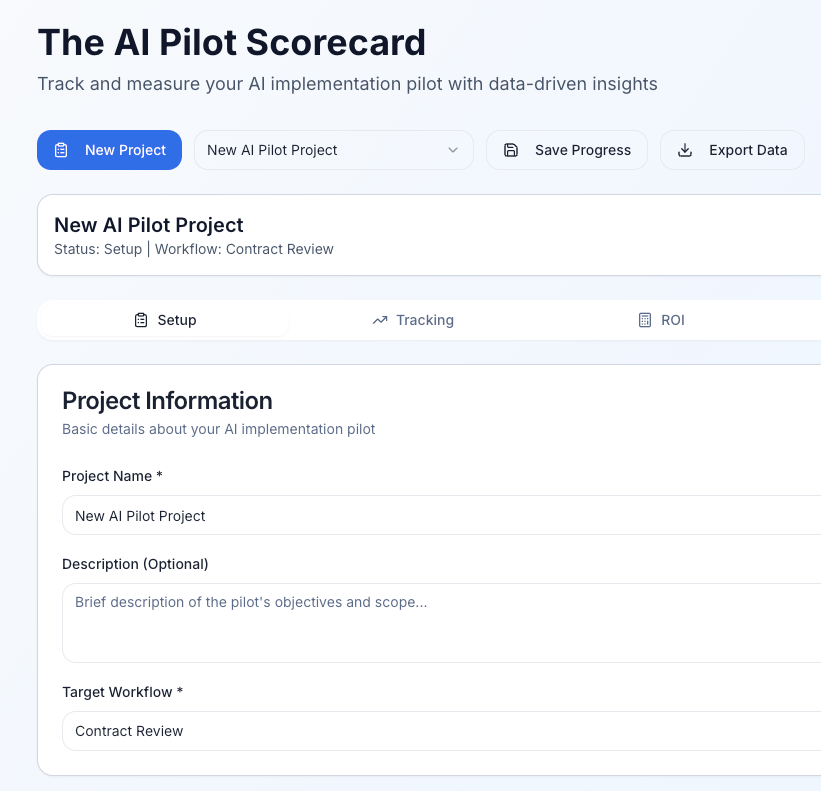

Want to stress‑test your next AI pilot before you commit?

Download the free AI Pilot Scorecard—it’s the same evaluation framework mid‑sized firms use to pick processes that deliver ROI in 90 days.

What Else Is Happening?

Thomson Reuters releases 2025 Future of Professionals Report: Law firms with a formal AI strategy are 3.9x more likely to see measurable benefits than firms with no plan. The top barrier? “Inability to demonstrate ROI to partners.” The clock is ticking. Read the full report →

Harvey announces integration with iManage: The partnership lets users access and return documents directly within iManage, reducing manual uploads and protecting against data leakage. Early adopters report smoother workflows and better document security. Read the announcement →

Canadian Law Societies update AI ethics guidance: Ontario, Alberta, Manitoba, BC, and Saskatchewan now require verification processes for AI‑assisted work, audit logs, and documented supervision protocols for client deliverables. Non‑compliance can trigger practice reviews. Read the guidance summary →

“Generative AI hallucinations remain pervasive in legal filings — verification isn’t optional.”

That's the Wrap

That’s it for this week. I hope this gave you a clearer picture of why most AI pilots fail—and how to make sure yours doesn’t.

If you’re planning an AI pilot this quarter and want to stress‑test your process selection before you commit budget, grab the AI Pilot Scorecard above. It’s free, it takes 10 minutes, and it’ll tell you whether you’re picking a quick win or setting yourself up for disappointment.

Got a process you’d like us to break down in a future edition? Reply and tell me. I read every response.

See you next week.

Liam Barnes

P.S. If your firm could benefit from some guidance in transitioning to an AI‑led firm, get in touch and book an introductory call with our AI Transformation consulting arm.

How Did We Do?

Your feedback shapes what comes next. Let us know if this edition hit the mark or missed.